Cloud Run SSH - computation power & on-demand

I've been working at Even for over a year now, and we use Cloud Run for everything. And I mean EVERYTHING. We use it to host our website, our backend server, our offline pipelines, our dashboards and our cron jobs.

And it's been pretty incredible. By structuring our entire backend to be event-driven, we've managed to keep all our operations serverless and our cloud costs extremely low. As you might know, Cloud Run charges you only for the time the server is actually processing a request, in increments of 100ms, which means that irrespective of how many services we have hosted on Cloud Run, we pay absolutely nothing while those services are not being used.

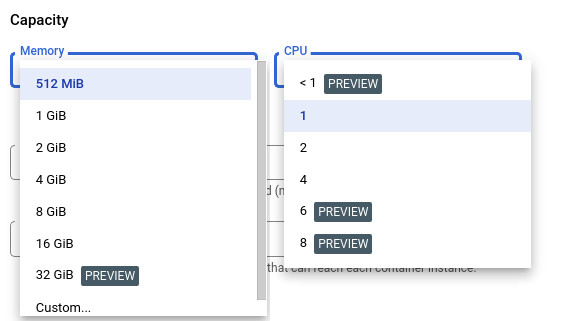

Just as a recap, Cloud Run allows you to run an arbitrary Docker container as your service, with basically one constraint: it must listen for HTTP requests on the PORT provided by Cloud Run. The container itself can have as many services running inside it (not exposed externally) and as many packages installed as you want. Cloud Run also offers a LOT of RAM/CPU combinations, giving you the perfect combination of on-demand and powerful.

Before deciding on a serverless architecture, we did a lot of research, and the most common complaint we noticed from other companies who've tried this approach is that it's hard to debug. It's hard to see what is happening inside the container, and the Cloud Run environment can be unpredictable.

If only there were a way to SSH into a Cloud Run container and see what's happening...

The problem with SSH

SSH runs over TCP, much like HTTP. In theory it should be possible to have a serverless SSH setup similar to a serverless HTTP service like Cloud Run. The only reason we can't do it transparently with Cloud Run is that Cloud Run requires the exposed port to serve HTTP.

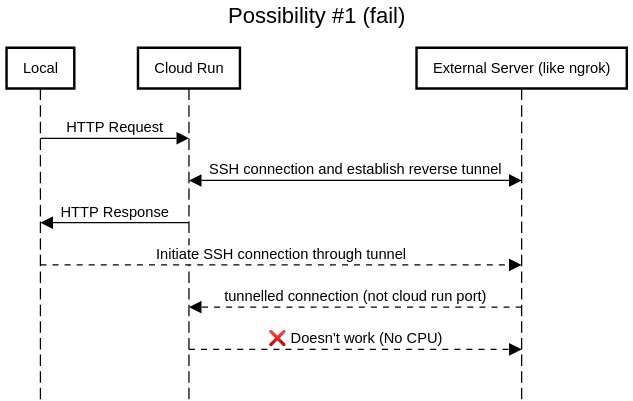

One more tricky part is that a Cloud Run container is allocated CPU only while it's processing an incoming request. This means that if you were thinking of something like this, it doesn't work.

Possible solutions

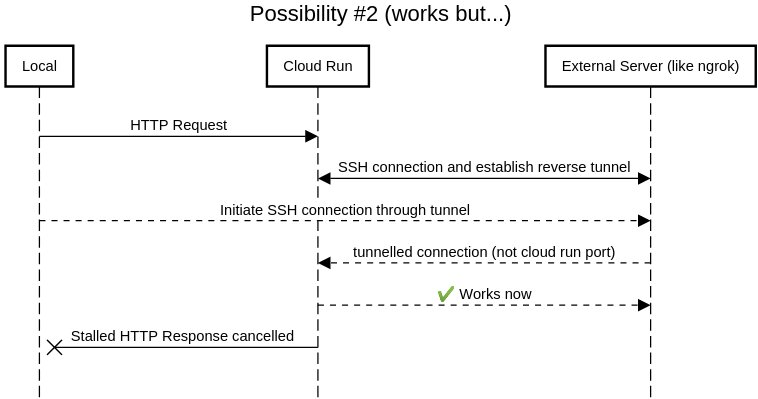

As you can probably predict, one possible solution is to forcefully keep the Cloud Run machine alive by making sure the initial HTTP request is long lived. Since Cloud Run supports HTTP timeouts of up to 60 minutes, this is actually possible.

This solution works, but has 2 drawbacks:

- You need a container that stalls indefinitely on the HTTP request while it establishes a connection with an external server.

- You depend on an external (SSH-exposed) server like ngrok to establish the SSH connection, which means an additional hop, significantly lower bandwidth and/or additional cost.

A better solution

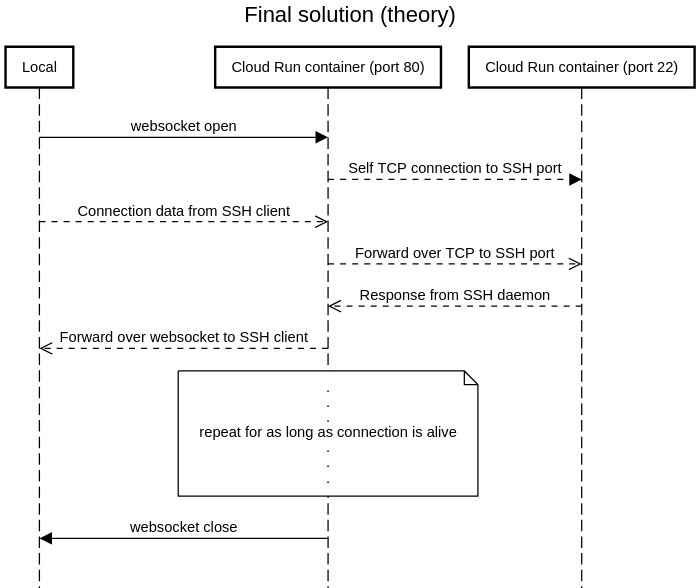

Clearly the above solution is unacceptable for many use-cases. Interestingly, Cloud Run also supports long lived websocket connections. Websockets are quite similar to TCP sockets in the sense that they are long lived connections used to send arbitrary data with low overhead.

Of course, since websockets are layered on top of TCP, there will definitely be some overhead from the extra protocol, but we can squabble over those numbers later. Theoretically, it should be possible to use a websocket connection as a proxy for the data originally meant to be sent over TCP sockets (as in the case of SSH).
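To put a rough number on that overhead, the framing itself adds only a few bytes per message. The sketch below computes the per-frame header size from the frame layout in RFC 6455. `ws_frame_overhead` is a hypothetical helper name used purely for illustration, and the calculation ignores the one-time HTTP upgrade handshake and any TLS overhead.

```shell
# Per-frame header size in bytes for a websocket frame (RFC 6455, section 5.2).
# Hypothetical helper, for illustration only.
# Usage: ws_frame_overhead <payload_bytes> <masked: 1 = client-to-server, 0 = server-to-client>
ws_frame_overhead() {
  payload=$1
  masked=$2
  if [ "$payload" -le 125 ]; then
    header=2     # 2-byte base header, length fits in the 7-bit field
  elif [ "$payload" -le 65535 ]; then
    header=4     # base header + 16-bit extended length
  else
    header=10    # base header + 64-bit extended length
  fi
  if [ "$masked" -eq 1 ]; then
    header=$((header + 4))   # 4-byte masking key on client-to-server frames
  fi
  echo "$header"
}

ws_frame_overhead 100 1      # small payload, client to server: prints 6
ws_frame_overhead 16384 0    # 16 KiB chunk, server to client: prints 4
```

For bulk transfers the header is a tiny fraction of each frame; for single keystrokes it is proportionally larger, but still only a handful of bytes.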

Now, when I first did this, I wrote a Go program for both the client side and the server side proxies. However going into those details would make this article too long. For demonstration purposes we are going to use a combination of websocat and netcat - the UNIX tools for dealing with websocket connections and TCP connections respectively to mimic the same functionality.

On the server side

websocket 8080 -> TCP 22

$ websocat --binary -s 8080 | netcat 127.0.0.1 22

But we want the response from netcat to be fed back into websocat. For this we can use a named pipe.

$ mkfifo pipe1

$ websocat --binary -s 8080 < pipe1 | netcat 127.0.0.1 22 > pipe1

Actually, I also found out that netcat is redundant, since websocat supports TCP natively. This makes the command even simpler:

websocat --binary ws-l:0.0.0.0:8080 tcp:127.0.0.1:22

On the client side

On the client side, we could build a similar proxy using both websocat and netcat, but a simpler option is to use the OpenSSH client's ProxyCommand option. This makes the SSH traffic flow over the stdin and stdout of a helper command, which is exactly what we want.

ssh -o ProxyCommand='websocat --binary wss://<service url>' root@anything

Full working example

You can use this Dockerfile to generate a Cloud Run service to which you can SSH:

FROM alpine:latest

RUN apk update && apk add websocat openssh && \
    ( echo 'root:root' | chpasswd ) && \
    sed -i 's|#PermitRootLogin|PermitRootLogin yes\n\0|g' \
        /etc/ssh/sshd_config && \
    cat /etc/ssh/sshd_config

CMD ( cd /etc/ssh && ssh-keygen -A ) && \
    /usr/sbin/sshd -f /etc/ssh/sshd_config && \
    websocat --binary ws-l:0.0.0.0:8080 tcp:127.0.0.1:22

This Dockerfile uses a barebones alpine image, but you could use a beefy image with a lot of tools that you might need in your temporary computation machine. It installs websocat and openssh, enables root SSH login and sets the root password to root.

Put this Dockerfile in an empty directory and deploy this using:

$ gcloud alpha run deploy alpine-ssh --platform managed \
    --execution-environment gen2 --source .

You can SSH to the resulting service using:

$ ssh -o ProxyCommand='websocat --binary wss://alpine-ssh-AAAAAAAAAA.a.run.app' root@host

(Use root when prompted for the password.) SUCCESS!
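If you connect often, the ProxyCommand invocation can be wrapped in a small shell function. This is a minimal sketch rather than part of the original setup: `cloudrun_ssh` and the `DRY_RUN` switch are hypothetical names invented here, and it assumes `ssh` and `websocat` are on your PATH.

```shell
# cloudrun_ssh <service-hostname> [extra ssh options...]
# Hypothetical helper: opens an SSH session to a Cloud Run service through websocat.
# Set DRY_RUN=1 to print the ssh command instead of running it.
cloudrun_ssh() {
  if [ "$#" -lt 1 ]; then
    echo "usage: cloudrun_ssh <service-hostname> [ssh options...]" >&2
    return 1
  fi
  service_host=$1
  shift
  proxy="websocat --binary wss://${service_host}"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    # Printed without shell quoting; meant for inspection, not copy-paste
    echo ssh -o "ProxyCommand=${proxy}" "$@" root@host
  else
    ssh -o "ProxyCommand=${proxy}" "$@" root@host
  fi
}
```

With the example service above, `cloudrun_ssh alpine-ssh-AAAAAAAAAA.a.run.app` would then open the session, and any extra arguments (such as `-v`) are passed straight through to `ssh`.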

Notes:

- Cloud Run containers are ephemeral. This means that any data you have stored locally on the container will be destroyed after a short period of inactivity (~15min).

- You may want to set the maximum number of instances to 1, to make sure all logins during the same period hit the same container instance. Otherwise, if the service scales out, there is no guarantee that two SSH sessions will connect to the same container.

- Being able to SSH to Cloud Run also opens up a host of possibilities thanks to port forwarding (which also works in our current implementation). For instance, you could use Cloud Run services as your own private (around-the-world) VPN.
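The last two notes can be sketched as shell helpers. Treat this as an illustration under assumptions, not a tested recipe: the function names and the `GCLOUD`/`SSH` override variables are hypothetical, the 3600-second timeout simply matches the 60-minute limit mentioned earlier, and the SOCKS proxy only works if sshd in the container permits TCP forwarding (check that `AllowTcpForwarding` is not set to `no` in `sshd_config`; some distributions ship it disabled).

```shell
# Hypothetical helpers; service names and the port are placeholders.
# GCLOUD and SSH can be overridden to substitute a different binary.

# Pin the service to a single instance and raise the request timeout to
# 60 minutes, so concurrent sessions share one container and last longer.
pin_single_instance() {
  service=$1
  "${GCLOUD:-gcloud}" run services update "$service" \
    --platform managed --max-instances 1 --timeout 3600
}

# Open a local SOCKS proxy on 127.0.0.1:<port> whose traffic exits via the container.
# -D enables dynamic port forwarding; -N skips running a remote command.
socks_via_cloudrun() {
  service_host=$1
  port=${2:-1080}
  "${SSH:-ssh}" -N -D "127.0.0.1:${port}" \
    -o "ProxyCommand=websocat --binary wss://${service_host}" root@host
}
```

After `pin_single_instance alpine-ssh`, running `socks_via_cloudrun alpine-ssh-AAAAAAAAAA.a.run.app` and pointing a browser at the SOCKS proxy on `127.0.0.1:1080` would route its traffic through the Cloud Run region, which is one way to approximate the VPN idea.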